Enhancing Infrastructure Management with Artificial Intelligence

Introduction and Article Outline

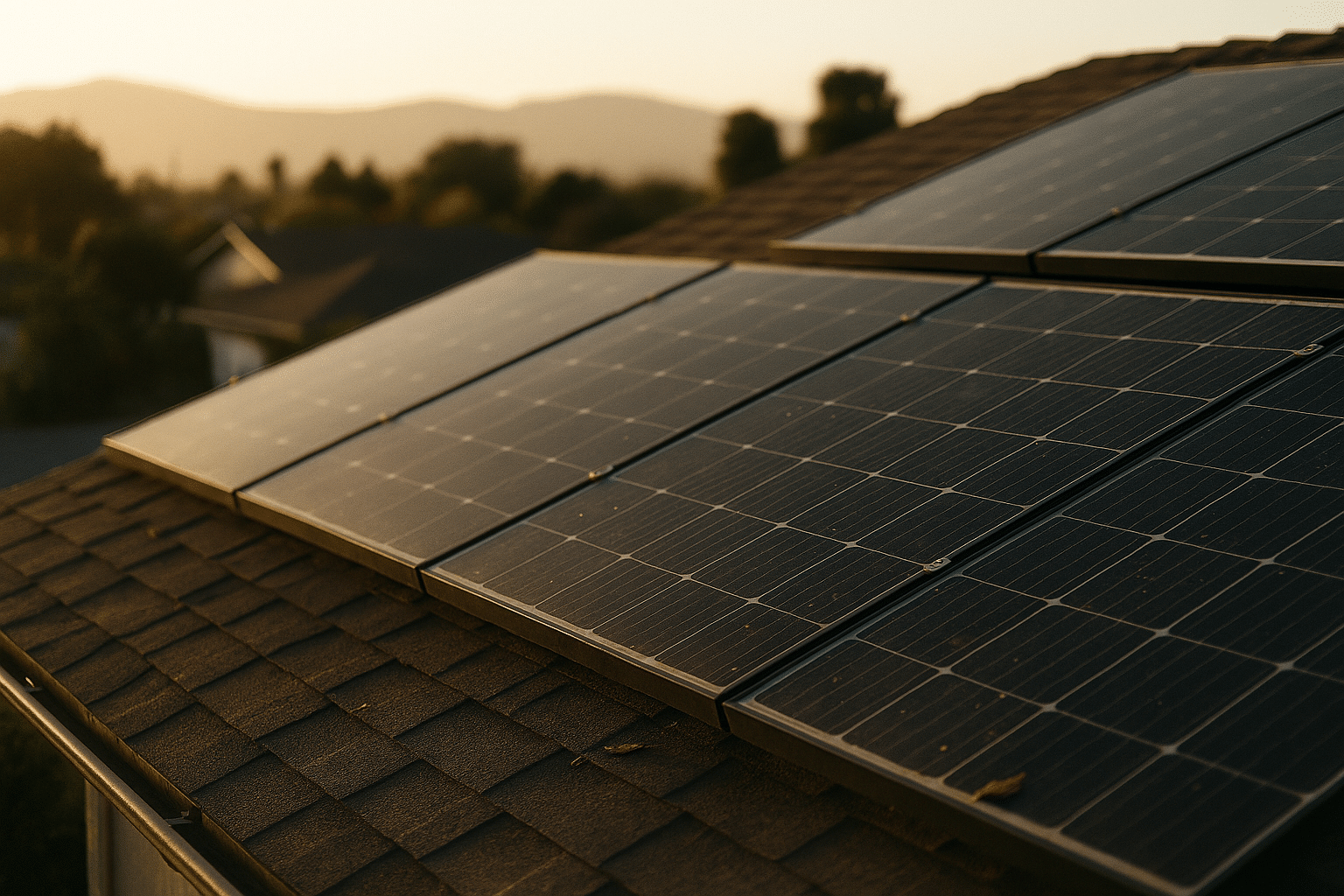

Infrastructure is a city’s circulation system: pipes and power lines carry what communities need, roads and rails move people and goods, and hidden control rooms balance supply with demand. Artificial intelligence is changing how all of this is coordinated, helping planners and operators act faster and with more confidence. Rather than chasing emergencies, teams can anticipate issues, automate repetitive work, and tune services to what citizens actually experience on the ground. This article explores three pillars that, together, elevate infrastructure management: automation, predictive analytics, and the broader framework of smart cities. You will find practical steps, comparative insights, and examples that illustrate tangible benefits without glossing over risks or constraints. Think of it as a field guide for moving from pilots to durable practice.

Outline of the article:

– The role of automation in fieldwork, control rooms, and service workflows, with measurable impacts on cost, time, and safety.

– Predictive analytics methods that turn sensor streams and logs into early warnings and optimized maintenance schedules.

– Smart-city integration that connects assets, data, and policies, balancing performance with privacy, security, and equity.

– Implementation roadmap, risk management, and the metrics that matter when justifying investment and scaling solutions.

Why this matters now is straightforward: infrastructure networks face mounting stress from aging assets, climate volatility, and budget constraints. Traditional operating models struggle when storm patterns shift, energy loads fluctuate quickly, or traffic rebounds unpredictably. AI-supported methods offer a way to adapt without rebuilding everything at once. They help detect leaks before water is lost, smooth bus headways without adding vehicles, and schedule substation inspections based on condition rather than fixed calendars. The goal is not automation for its own sake, but reliable services, safer operations, and prudent use of public funds.

Automation: Streamlining Field Operations and Control Rooms

Automation in infrastructure management spans from work order routing to closed-loop control in plants and substations. In practice, routine activities consume a large share of staff time: logging inspection notes, cross-checking inventory, validating permits, and dispatching crews. Software agents can triage these tasks, standardize inputs, and flag exceptions for human review. In control rooms, automation can handle frequent, low-variance adjustments—such as valve modulation within safety bands or signal timing plans matched to observed flows—while operators focus on atypical conditions and planning. This division of labor improves responsiveness and reduces the cognitive load that leads to avoidable errors.

Utilities and transport agencies frequently report shorter cycle times and fewer manual touches. Automated triage of work requests, for example, can cut intake-to-dispatch intervals by minutes or hours per request. Summed over a month of requests, that frees meaningful crew time and speeds response. Document generation and data validation scripts reduce the rework and discrepancies that otherwise ripple into procurement or compliance problems. In industrial settings, supervisory systems that enforce guardrails can prevent process drift and reduce the likelihood of out-of-spec output. Exact figures vary by context, but case reports commonly note double-digit percentage reductions in administrative effort and measurable drops in minor incidents tied to manual handling.

Comparing approaches helps frame choices:

– Rules-first automation applies deterministic logic and is easy to audit; it works well for stable processes with clear thresholds.

– Learning-augmented automation adapts to patterns and anomalies; it suits variable environments but requires careful monitoring.

– Human-in-the-loop designs blend both, keeping staff in control while delegating repetitive substeps.
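As a minimal sketch of rules-first triage with a human-in-the-loop fallback, the Python below routes a work request by keyword and escalates anything the rules cannot settle. The keyword table, categories, and priority numbers are hypothetical placeholders, not a standard taxonomy. A real deployment would draw them from the agency's own work-order codes.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical rule table: (keyword, category, priority); 1 is most urgent.
RULES = [
    ("gas", "safety", 1),
    ("leak", "water", 2),
    ("outage", "power", 2),
    ("pothole", "roads", 3),
]

@dataclass
class TriageResult:
    category: str
    priority: int
    needs_review: bool  # True sends the request to a human queue
    reason: str

def triage(text: str, asset_id: Optional[str]) -> TriageResult:
    """Deterministic, auditable routing; anything ambiguous is escalated."""
    if asset_id is None:
        # Without an asset reference the request cannot be auto-routed.
        return TriageResult("unassigned", 3, True, "missing asset id")
    lowered = text.lower()
    matches = [(pri, cat) for kw, cat, pri in RULES if kw in lowered]
    if not matches:
        return TriageResult("unassigned", 3, True, "no rule matched")
    priority, category = min(matches)  # most urgent match wins
    # If rules point to different categories, keep the urgent priority
    # but ask a person to confirm the routing.
    conflict = len({cat for _, cat in matches}) > 1
    reason = "conflicting rules" if conflict else "rule match"
    return TriageResult(category, priority, conflict, reason)
```

Because every decision comes from the visible rule table, each routing can be audited after the fact, and the `needs_review` flag is where the human-in-the-loop handoff happens.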

Infrastructure operators often start with rules-first changes—think standardized forms that auto-populate based on asset IDs—and graduate to learning-based components as data quality improves. A practical path might include automated scheduling that considers crew skills and travel time; digital checklists that nudge compliance; and control policies that can revert to manual mode instantly. Guardrails matter: set clear rollback procedures, track override frequency, and log decisions for post-event review. When teams trust the system to handle the routine—and the system “knows when to stop”—automation becomes a safety net rather than a black box.
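The guardrails described above (a safety band, instant reversion to manual, an override count, and a decision log) can be sketched as a small controller class. This illustration assumes valve position in percent open and uses hypothetical names throughout; it is not a control-system API.

```python
from dataclasses import dataclass, field

@dataclass
class GuardedValveController:
    """Automation may only move within [low, high] and by at most max_step per cycle."""
    low: float        # lower safety bound, % open (assumed units)
    high: float       # upper safety bound, % open
    max_step: float   # largest automatic change allowed per cycle
    position: float
    manual: bool = False
    overrides: int = 0
    log: list = field(default_factory=list)  # (event, requested, resulting) tuples

    def propose(self, target: float) -> float:
        """Apply an automated target, limited by rate and band; no-op in manual mode."""
        if self.manual:
            self.log.append(("skipped", target, self.position))
            return self.position
        step = max(-self.max_step, min(self.max_step, target - self.position))
        self.position = max(self.low, min(self.high, self.position + step))
        self.log.append(("auto", target, self.position))
        return self.position

    def operator_override(self, position: float) -> None:
        """Switch to manual at once; the band limits automation, not operators."""
        self.manual = True
        self.overrides += 1
        self.position = position
        self.log.append(("override", position, position))

    def release(self) -> None:
        """Hand control back to automation after review."""
        self.manual = False
        self.log.append(("release", self.position, self.position))
```

The `overrides` counter and `log` list correspond to the override frequency and decision records mentioned above, and they give post-event reviews something concrete to examine.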

Predictive Analytics: From Sensor Data to Actionable Foresight

Predictive analytics converts raw signals—vibration on a pump, pressure in a pipe, harmonics on a feeder, weather near a culvert—into probabilities and lead times. The payoff is straightforward: shift maintenance and operations from reactive or calendar-based to condition-based. That shift saves parts, reduces truck rolls, and avoids downstream service disruptions. A typical pipeline includes data ingestion from historians and log files, cleaning and alignment across different sampling rates, feature engineering that captures trends and cyclic behavior, model training with ground-truth events, and deployment with thresholds geared to lead time and false-alarm tolerance. Feedback loops retrain models as assets age or operating regimes change.
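The cleaning, alignment, and feature-engineering steps of that pipeline can be illustrated with a standard-library sketch. It assumes readings arrive as `(timestamp_seconds, value)` pairs. Each signal is averaged into fixed time buckets so that signals sampled at different rates line up, and then a rolling mean and a least-squares slope serve as trend features. The function names and bucket scheme are illustrative choices, not a prescribed method.

```python
from collections import defaultdict
from statistics import mean

def resample(readings, bucket_s):
    """Average (timestamp_s, value) readings into fixed-width time buckets."""
    buckets = defaultdict(list)
    for t, v in readings:
        buckets[int(t // bucket_s)].append(v)
    return {b: mean(vs) for b, vs in buckets.items()}

def align(a, b):
    """Keep only buckets present in both resampled signals, in time order."""
    return [(k, a[k], b[k]) for k in sorted(a.keys() & b.keys())]

def slope(values):
    """Least-squares slope per bucket: a simple trend feature."""
    n = len(values)
    if n < 2:
        return 0.0
    x_bar, y_bar = (n - 1) / 2, mean(values)
    num = sum((i - x_bar) * (y - y_bar) for i, y in enumerate(values))
    den = sum((i - x_bar) ** 2 for i in range(n))
    return num / den

def features(aligned, window):
    """Rolling mean and trend of the first signal, plus the second signal's value."""
    rows = []
    for i in range(window - 1, len(aligned)):
        win = [x for _, x, _ in aligned[i - window + 1 : i + 1]]
        bucket, _, other = aligned[i]
        rows.append({"bucket": bucket, "mean": mean(win),
                     "trend": slope(win), "other": other})
    return rows

# Hypothetical data: pressure every 30 s, temperature every 60 s.
pressure = [(0, 50.0), (30, 52.0), (60, 49.0), (90, 51.0), (120, 47.0), (150, 47.0)]
temperature = [(0, 20.0), (60, 21.0), (120, 22.0)]
rows = features(align(resample(pressure, 60), resample(temperature, 60)), window=2)
```

Rows like these, joined with ground-truth failure events, would become the training table for the models described next.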

Model choices vary by problem:

– Time-series forecasting estimates loads and flows to pre-position resources or adjust schedules.

– Classification models score failure risk or leak likelihood at the asset level, prioritizing inspections.

– Anomaly detection highlights behavior not seen in the training window, a useful early signal where labels are scarce.

– Optimization layers turn predictions into decisions, picking which assets to service under budget and crew constraints.
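For the anomaly-detection case, one simple and widely used baseline is a rolling z-score, which compares each new value with the mean and standard deviation of a trailing window. The sketch below assumes evenly spaced samples and uses a hypothetical default threshold of three standard deviations. Production systems typically add seasonality handling and more robust statistics.

```python
from statistics import mean, pstdev

def zscore_anomalies(values, window, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` samples."""
    flagged = []
    for i in range(window, len(values)):
        past = values[i - window : i]
        mu, sigma = mean(past), pstdev(past)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if values[i] != mu:
                flagged.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged

readings = [10, 11, 10, 11, 10, 11, 10, 11, 30, 11]
print(zscore_anomalies(readings, window=6))  # [8]
```

No labels are needed, which is why this kind of detector is a reasonable first signal where failure history is sparse.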

Consider a water network: correlating transient pressure drops with temperature and demand patterns can surface likely leak zones, guiding acoustic surveys to a few street segments rather than entire neighborhoods. In power distribution, transformer health indices, derived from temperature cycles, load profiles, and oil-condition metrics such as dissolved-gas analysis where available, can rank units by risk so that a limited replacement budget targets the most vulnerable units. Transit systems can use dwell-time and headway predictions to adjust dispatching in near real time, smoothing the passenger experience at minimal added cost. Documented programs in these domains often report reductions in unplanned downtime on the order of 10–20% and more efficient use of maintenance hours, though outcomes depend on data coverage and operational discipline.
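Transformer ranking and the optimization layer can be combined in a short sketch. The risk score is a hypothetical weighted blend of normalized indicators; its reference values and weights are assumptions, not engineering limits. The selection step is a standard 0/1 knapsack that maximizes the total risk addressed within an integer budget.

```python
def risk_score(hot_spot_c, load_factor, age_years, weights=(0.5, 0.3, 0.2)):
    """Hypothetical health-based risk in [0, 1]; higher means more vulnerable.
    Each indicator is scaled by an assumed reference value and capped at 1."""
    temp = min(hot_spot_c / 140.0, 1.0)  # assumed reference temperature, deg C
    load = min(load_factor / 1.2, 1.0)   # 120 % of rating treated as worst case
    age = min(age_years / 50.0, 1.0)     # assumed 50-year service life
    w_temp, w_load, w_age = weights
    return w_temp * temp + w_load * load + w_age * age

def select_replacements(units, budget):
    """0/1 knapsack: choose units maximizing total risk with total cost <= budget.
    units: list of (unit_id, risk, cost) with integer costs (e.g. thousands of $)."""
    # best[c] = (total risk, chosen ids) achievable with total cost <= c
    best = [(0.0, ())] * (budget + 1)
    for uid, risk, cost in units:
        # Iterate capacities downward so each unit is chosen at most once.
        for c in range(budget, cost - 1, -1):
            candidate = best[c - cost][0] + risk
            if candidate > best[c][0]:
                best[c] = (candidate, best[c - cost][1] + (uid,))
    return best[budget]
```

A greedy "highest risk first" rule is simpler, but with a hypothetical budget of 80 and units costing 60, 40, 30, and 10, it can lock the budget into one expensive unit when three cheaper ones together remove more risk. The knapsack step avoids that.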

Comparisons clarify expectations. Preventive maintenance is simple and predictable but can over-service healthy equipment. Predictive maintenance promises better timing but requires investment in sensors, integration, and model management. Hybrid strategies—running low-cost assets to failure while monitoring high-value ones closely—frequently deliver balanced results. To succeed, define performance metrics up front: false-alarm rate, average lead time, cost per averted incident, and service-level impacts. Pair those with governance basics: data lineage records, model versioning, and clear ownership for alerts. Predictive analytics is not only about algorithms; it is a management practice that ties data, expertise, and accountability into a repeatable loop.
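The metrics named above can be computed from historical alert and event logs. This sketch assumes each log is a list of `(asset_id, time_in_hours)` pairs and that an alert counts as correct if the same asset fails within a chosen horizon. It also assumes every warned event was averted, which overstates the benefit when crews could not act in time. Here the false-alarm rate is measured as the share of alerts not followed by an event.

```python
def alert_metrics(alerts, events, horizon, program_cost):
    """alerts, events: lists of (asset_id, time_in_hours).
    Assumes every event preceded by an alert was averted (optimistic)."""
    def warned_by(a_t, e_t):
        return 0 <= e_t - a_t <= horizon

    # An alert is "true" if the same asset has an event within the horizon.
    true_alerts = sum(
        1 for a_id, a_t in alerts
        if any(e_id == a_id and warned_by(a_t, e_t) for e_id, e_t in events)
    )
    lead_times = []
    for e_id, e_t in events:
        prior = [a_t for a_id, a_t in alerts if a_id == e_id and warned_by(a_t, e_t)]
        if prior:
            lead_times.append(e_t - min(prior))  # earliest warning sets lead time
    warned = len(lead_times)
    return {
        "false_alarm_rate": 1 - true_alerts / len(alerts) if alerts else 0.0,
        "avg_lead_time": sum(lead_times) / warned if warned else None,
        "detection_rate": warned / len(events) if events else None,
        "cost_per_averted": program_cost / warned if warned else None,
    }
```

Tracking these four numbers per model version makes it easier to tell whether a retrained model actually improved anything.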

Smart Cities: Integrating Systems, Data Governance, and Equity

Smart-city programs knit together assets and services so that the whole is more responsive than its parts. The vision is pragmatic: traffic signals coordinate with buses, storm drains talk to weather feeds, and street lighting adapts to pedestrian activity while conserving energy. Achieving this requires technical interoperability, secure data exchange, and policies that reflect community priorities. A layered architecture is helpful: devices and sensors at the edge; reliable communications; platforms that normalize data; analytics that produce alerts or setpoints; and application layers for operations and public information. Each layer must be replaceable without collapsing the stack, which argues for modular interfaces and open, well-documented schemas.
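Normalization in the platform layer can be sketched as adapters that map each device's payload onto one common record. The two vendor payload formats below are hypothetical, as is the `Reading` schema. The point is that replacing a device type means registering a new adapter, while downstream analytics keep consuming the same fields.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

KPA_PER_PSI = 6.894757  # unit conversion: 1 psi is about 6.894757 kPa

@dataclass(frozen=True)
class Reading:
    """Hypothetical common schema that every device adapter must produce."""
    asset_id: str
    metric: str       # e.g. "pressure_kpa"
    value: float
    ts: datetime      # always timezone-aware UTC

def from_vendor_a(p: dict) -> Reading:
    # Hypothetical payload: {"dev": "P-7", "psi": 10.0, "epoch": 1700000000}
    ts = datetime.fromtimestamp(p["epoch"], tz=timezone.utc)
    return Reading(p["dev"], "pressure_kpa", p["psi"] * KPA_PER_PSI, ts)

def from_vendor_b(p: dict) -> Reading:
    # Hypothetical payload: {"assetId": "P-7", "kPa": 68.9, "time": "2023-11-14T22:13:20+00:00"}
    ts = datetime.fromisoformat(p["time"]).astimezone(timezone.utc)
    return Reading(p["assetId"], "pressure_kpa", float(p["kPa"]), ts)

# Registry: swapping a vendor means adding an entry here, nothing downstream.
ADAPTERS = {"vendor_a": from_vendor_a, "vendor_b": from_vendor_b}

def normalize(source: str, payload: dict) -> Reading:
    return ADAPTERS[source](payload)
```

Publishing the `Reading` fields and units as a data dictionary is what makes the interface "open and well-documented" in practice.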

Integration choices carry trade-offs:

– Centralized hubs simplify oversight and analytics but concentrate risk and can become bottlenecks.

– Federated or mesh designs increase resilience and local autonomy but demand stronger coordination and standards.

– Edge analytics reduce bandwidth and latency but shift maintenance toward distributed devices.
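A common edge-analytics tactic for saving bandwidth is deadband reporting. The device forwards a sample only when it has changed meaningfully, plus a periodic heartbeat so the hub can tell a quiet sensor from a dead one. The sketch below assumes evenly spaced samples, and the deadband and heartbeat values are placeholders to be set per signal.

```python
def deadband_filter(samples, deadband, heartbeat):
    """Return the (index, value) pairs an edge device would transmit.
    A sample is sent if it differs from the last sent value by more than
    `deadband`, or if it is the `heartbeat`-th sample since the last send."""
    sent = []
    last_value = None
    since_last = 0
    for i, v in enumerate(samples):
        since_last += 1
        if last_value is None or abs(v - last_value) > deadband or since_last >= heartbeat:
            sent.append((i, v))
            last_value = v
            since_last = 0
    return sent

samples = [50.0, 50.1, 50.2, 51.0, 51.05, 51.1, 51.1, 51.1, 51.1]
print(deadband_filter(samples, deadband=0.5, heartbeat=4))
# [(0, 50.0), (3, 51.0), (7, 51.1)]
```

Nine samples shrink to three transmissions here. The trade-off noted above is that the deadband and heartbeat now live on many distributed devices that must be configured and maintained.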

Security and privacy sit alongside performance goals. Encrypt data in transit and at rest, minimize retention of personally identifiable information, and anonymize where possible. Access controls should map to roles, not individuals, and logs should capture who viewed or changed operational data. Resilience planning—failover routes for traffic control, manual modes for gates and pumps, backup power for key nodes—keeps services running when parts of the network fail. Equally important is equity: if signal plans optimize for vehicle throughput alone, neighborhoods reliant on walking or transit may see worse outcomes. Use metrics that reflect diverse needs, such as pedestrian wait times, transit reliability in off-peak hours, or temperature mitigation in heat-prone blocks.
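Role-based access with an audit trail can be sketched in a few lines. The roles, permission strings, and user names below are hypothetical. A production system would typically rely on the city's identity provider and tamper-evident log storage rather than an in-memory list.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission map; permissions attach to roles, not people.
ROLE_PERMISSIONS = {
    "operator": {"read:telemetry", "write:setpoint"},
    "analyst": {"read:telemetry"},
    "auditor": {"read:audit_log"},
}

class AccessController:
    def __init__(self, user_roles):
        self.user_roles = user_roles  # user -> set of role names
        self.audit_log = []           # append-only record of every attempt

    def check(self, user, permission, resource):
        """Allow only if one of the user's roles grants the permission; log either way."""
        roles = self.user_roles.get(user, set())
        allowed = any(permission in ROLE_PERMISSIONS.get(r, set()) for r in roles)
        self.audit_log.append({
            "when": datetime.now(timezone.utc).isoformat(),
            "who": user,
            "action": permission,
            "resource": resource,
            "allowed": allowed,
        })
        return allowed
```

Logging denied attempts as well as granted ones is what lets an auditor reconstruct who tried to view or change operational data.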

Governance frameworks make these choices transparent. Publish data dictionaries and algorithmic impact assessments in accessible language, explain how feedback changes configurations, and set up independent audits for sensitive systems like enforcement. Community engagement should be ongoing, not a single workshop: pilot in a small area, measure outcomes, adjust, and scale only if targets are met. On the procurement side, specify interoperability requirements and data portability so that future upgrades do not lock the city into one path. Smart cities are not gadgets; they are institutions that learn. The most enduring programs combine careful systems engineering with humility about uncertainty and a willingness to iterate.

Implementation Roadmap, Risks, and Measurable Outcomes

Moving from aspiration to impact calls for a staged plan anchored in business value. Begin by mapping pain points—frequent failures, slow responses, regulatory fines—and linking them to outcomes such as fewer outages, safer streets, or lower energy intensity. Build a lean baseline: what does a typical incident cost, how long does recovery take, and how often does it occur? These numbers help size opportunities and guard against overclaiming. With priorities set, develop a portfolio that mixes quick wins with foundational investments: automate data entry in maintenance systems; deploy a handful of condition sensors on critical assets; pilot demand-driven control in a limited corridor; and harden cybersecurity policies before data starts flowing widely.
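The baseline questions above (cost per incident, recovery time, frequency) combine into a simple annual-cost estimate, which in turn bounds the opportunity. In this sketch all figures are hypothetical placeholders, and disruption cost is assumed to scale linearly with recovery hours.

```python
from dataclasses import dataclass

@dataclass
class IncidentBaseline:
    """Hypothetical baseline for one incident type; all figures are placeholders."""
    name: str
    per_year: float        # how often it occurs
    direct_cost: float     # repair, crew, and fines per incident
    recovery_hours: float  # how long recovery takes
    cost_per_hour: float   # assumed cost of service disruption per hour

    def annual_cost(self) -> float:
        return self.per_year * (self.direct_cost + self.recovery_hours * self.cost_per_hour)

def opportunity(baselines, reductions):
    """Upper-bound annual savings, given an assumed fractional reduction per incident type."""
    return {b.name: b.annual_cost() * reductions.get(b.name, 0.0) for b in baselines}

main_breaks = IncidentBaseline("main_break", per_year=12, direct_cost=8000,
                               recovery_hours=6, cost_per_hour=1500)
```

For these hypothetical figures, 12 × (8,000 + 6 × 1,500) = $204,000 per year; an assumed 25% reduction would be worth at most $51,000 annually. Writing the arithmetic down makes it harder to overclaim.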

A practical roadmap might unfold in phases:

– Foundation: clean asset registries, unify IDs, define common data models, and set minimum telemetry standards.

– Enablement: introduce automation in scheduling and reporting, establish model governance, and train staff on tooling.

– Expansion: roll out predictive maintenance to the next asset class, broaden integrations, and add redundancy for resilience.

– Optimization: tune thresholds based on observed false alarms, refine control policies, and renegotiate service-level targets.
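Threshold tuning in the optimization phase can be framed as a sweep over past alert windows. The goal is the lowest threshold whose alerts stay within an agreed false-alarm share, since lower thresholds catch more events. The sketch assumes labeled history as `(risk_score, event_followed)` pairs. The target share is a policy choice, not a value from this article.

```python
def tune_threshold(scored, max_false_alarm_share):
    """scored: list of (risk_score, event_followed) pairs from past windows.
    Returns (threshold, false_alarm_share, recall) for the lowest threshold
    meeting the target, or None if no threshold does."""
    total_events = sum(1 for _, hit in scored if hit)
    # Recall can only fall as the threshold rises, so the first feasible
    # threshold in ascending order gives the highest recall.
    for t in sorted({s for s, _ in scored}):
        raised = [hit for s, hit in scored if s >= t]
        false_share = raised.count(False) / len(raised)
        if false_share <= max_false_alarm_share:
            recall = raised.count(True) / total_events if total_events else 0.0
            return t, false_share, recall
    return None

history = [(0.1, False), (0.2, False), (0.3, True), (0.4, False),
           (0.6, True), (0.8, True), (0.9, True)]
print(tune_threshold(history, 0.25))  # (0.3, 0.2, 1.0)
print(tune_threshold(history, 0.1))   # (0.6, 0.0, 0.75)
```

Tightening the false-alarm target from 25% to 10% in this made-up history costs a quarter of the detections, which is the kind of trade-off to make explicit when renegotiating service-level targets.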

Risk management is continuous. Common pitfalls include underestimating data preparation effort, overlooking change management, and deploying models without clear handoffs to operations. Mitigations include staged gates for production release, “gray box” dashboards that explain model drivers, and drills that practice manual reversion. Budgeting should account for lifecycle costs—calibration, retraining, device replacement—not just initial procurement. Workforce implications are real: automation trims repetitive tasks but elevates the importance of troubleshooting and systems thinking. Training programs and clear career pathways keep teams engaged and capable.
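A staged release gate can be made explicit as a checklist that must pass before a model moves into production. The sketch below assumes the candidate first runs in a "shadow" period, where alerts are logged but not acted on. The criteria and limits are hypothetical and would be set by each organization.

```python
from dataclasses import dataclass

@dataclass
class ShadowResults:
    """Metrics gathered while a candidate model runs without driving actions."""
    weeks_in_shadow: int
    false_alarm_share: float
    recall: float
    manual_reversion_drill_passed: bool
    owner_assigned: bool  # a named operations team owns the alerts

# Hypothetical gate criteria.
GATE = {"min_weeks": 8, "max_false_alarm_share": 0.3, "min_recall": 0.6}

def promotion_gate(r: ShadowResults):
    """Return (approved, blockers); every criterion must pass."""
    blockers = []
    if r.weeks_in_shadow < GATE["min_weeks"]:
        blockers.append("shadow period too short")
    if r.false_alarm_share > GATE["max_false_alarm_share"]:
        blockers.append("false alarms above limit")
    if r.recall < GATE["min_recall"]:
        blockers.append("recall below limit")
    if not r.manual_reversion_drill_passed:
        blockers.append("manual reversion drill not passed")
    if not r.owner_assigned:
        blockers.append("no operational owner for alerts")
    return (not blockers, blockers)
```

Returning the list of blockers, rather than a bare yes or no, gives the project team a concrete to-do list and a record of why a release was held.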

Measure what matters and stay honest about uncertainty. Track leading indicators (alert lead time, data latency, sensor uptime) alongside outcomes (incident frequency, response time, customer complaints, resource consumption). Many programs report payback periods within a few budget cycles when targeting high-impact assets or corridors, but the range is wide and context-specific. The essential discipline is to retire pilots that do not earn their keep and scale those that do, with transparent criteria. When automation, predictive analytics, and smart-city integration reinforce each other, the result is a calmer control room, fewer surprises in the field, and services that adjust smoothly to the rhythms of daily life. That is a practical definition of progress—and a roadmap cities can own and sustain.
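Payback depends heavily on whether lifecycle costs are counted. This undiscounted sketch, using hypothetical figures, returns the first year in which cumulative net savings cover the upfront cost. A fuller analysis would discount future cash flows.

```python
def payback_year(upfront, annual_savings, annual_lifecycle_cost, horizon_years=10):
    """First year in which cumulative net savings cover the upfront cost, else None.
    Lifecycle costs (calibration, retraining, device replacement) are netted out
    each year. Cash flows are not discounted."""
    cumulative = -upfront
    for year in range(1, horizon_years + 1):
        cumulative += annual_savings - annual_lifecycle_cost
        if cumulative >= 0:
            return year
    return None

print(payback_year(300_000, 150_000, 0))       # 2
print(payback_year(300_000, 150_000, 30_000))  # 3
print(payback_year(300_000, 50_000, 60_000))   # None
```

With an assumed $300,000 upfront cost and $150,000 in annual savings, payback arrives in year 2 when lifecycle costs are ignored but in year 3 once $30,000 a year is netted out. If lifecycle costs exceed savings, the project never pays back, which is the kind of pilot to retire.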